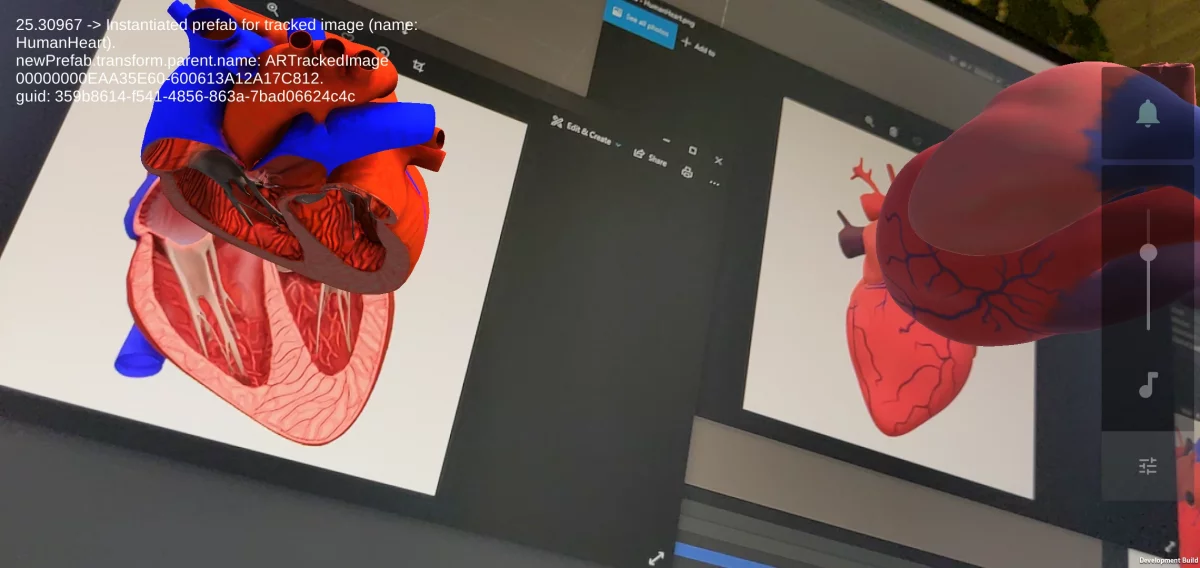

With 2D image tracking, images in the real world serve as anchors for your virtual content. You need pre-defined marker images; Google calls the system Augmented Images. Just point your phone at the image, and your app makes the 3D model appear on top of it right away.

In the previous part of the tutorial, we wrote Unity scripts so that the user could place 3D models in the Augmented Reality world. A raycast from the smartphone’s screen hit a trackable in the real world, where we then anchored the object. However, this approach requires user interaction, so the app has to guide users well through the process, especially if they’re new to AR.


Using 2D Image Tracking

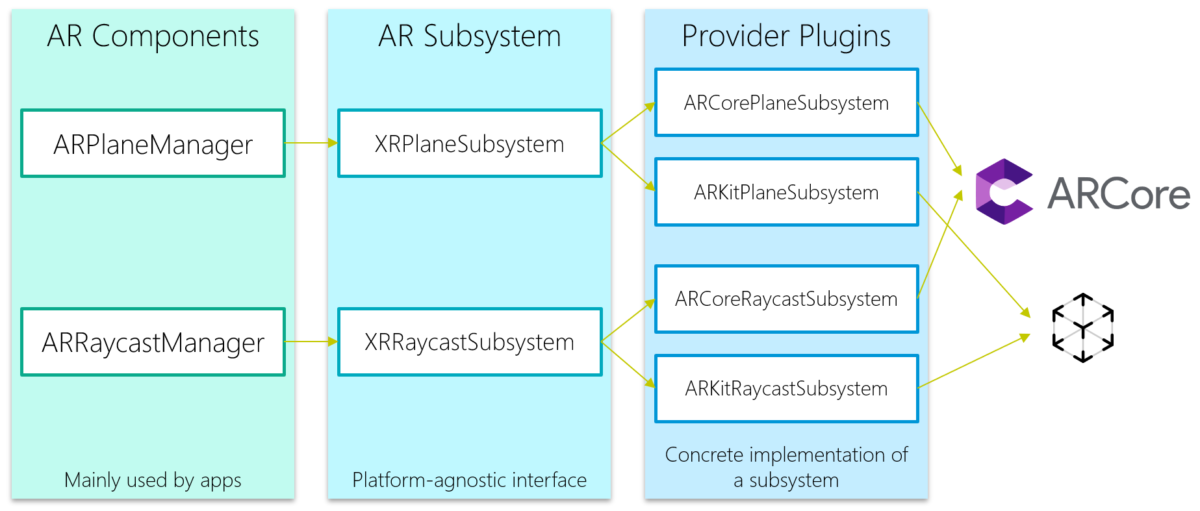

You need to provide reference images, which your app’s users will then encounter in the real world. AR Foundation recognizes these images, tells them apart, and tracks their physical location.
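To make this more concrete, here is a minimal sketch of a Unity script that reacts when a reference image is recognized. It assumes an AR Foundation 4.x project where an `ARTrackedImageManager` with an assigned reference image library sits on the same GameObject. The class name `TrackedImageLogger` is made up for illustration, and newer AR Foundation releases may expose the change event under a different name.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

// Hypothetical helper: logs which reference image was found and where.
// Assumes an ARTrackedImageManager is attached to the same GameObject.
[RequireComponent(typeof(ARTrackedImageManager))]
public class TrackedImageLogger : MonoBehaviour
{
    private ARTrackedImageManager _trackedImageManager;

    private void Awake()
    {
        _trackedImageManager = GetComponent<ARTrackedImageManager>();
    }

    private void OnEnable()
    {
        // Subscribe while the component is active.
        _trackedImageManager.trackedImagesChanged += OnTrackedImagesChanged;
    }

    private void OnDisable()
    {
        // Always unsubscribe to avoid callbacks on a disabled object.
        _trackedImageManager.trackedImagesChanged -= OnTrackedImagesChanged;
    }

    private void OnTrackedImagesChanged(ARTrackedImagesChangedEventArgs args)
    {
        // Images that were recognized for the first time.
        foreach (ARTrackedImage image in args.added)
        {
            Debug.Log($"Found '{image.referenceImage.name}' at {image.transform.position}");
        }

        // Images whose pose or tracking state changed since the last frame.
        foreach (ARTrackedImage image in args.updated)
        {
            if (image.trackingState == TrackingState.Tracking)
            {
                Debug.Log($"Tracking '{image.referenceImage.name}' at {image.transform.position}");
            }
        }
    }
}
```

Each `ARTrackedImage` carries the name of the matched reference image, so one script can tell several markers apart and react differently to each.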

Some usage scenarios where 2D image tracking is helpful:

- Recognition of real-world objects

- Automatic placement of information on top of objects

- Indoor information or navigation systems

- Often quicker & easier than plane detection
