In the first part, we took a look at how an algorithm identifies keypoints in camera frames. These are the base for tracking & recognizing the environment.

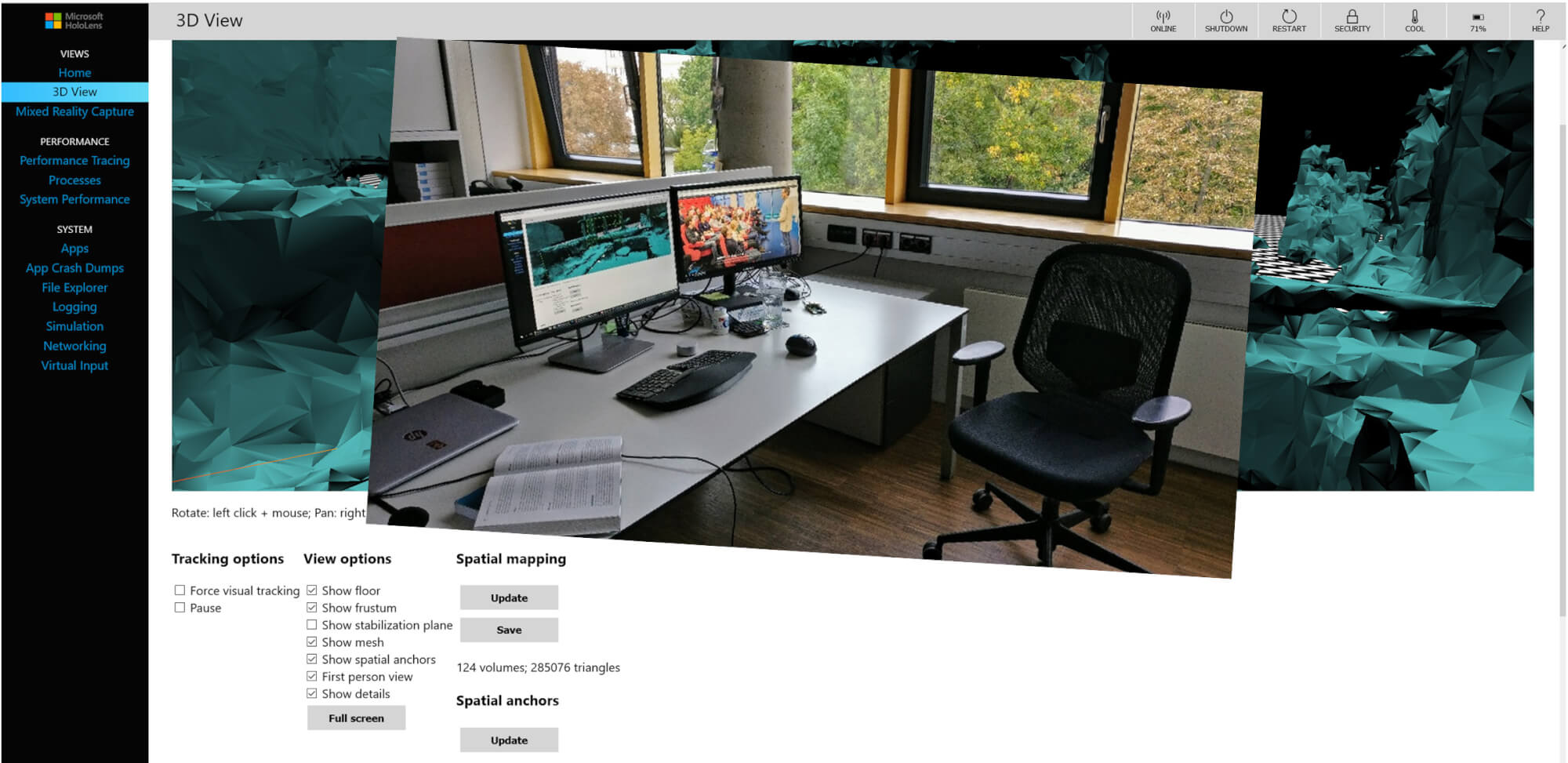

For Augmented Reality, the device has to know more: its 3D position in the world. It calculates this through the spatial relationship between itself and multiple keypoints. This process is called “Simultaneous Localization and Mapping” – SLAM for short.

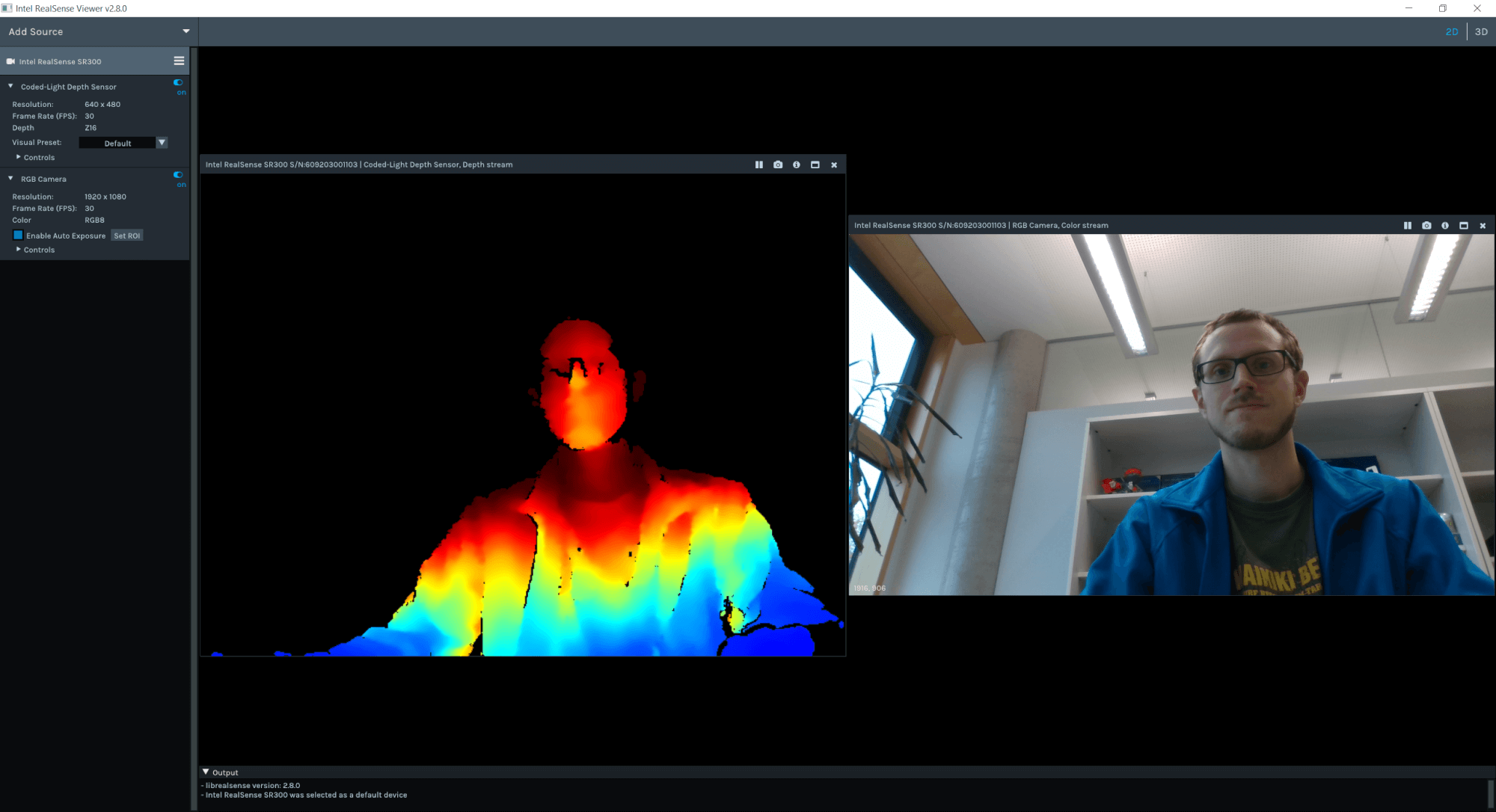

Sensors for Perceiving the World

The high-level view: when you first start an AR app using Google ARCore, Apple ARKit or Microsoft Mixed Reality, the system doesn’t know much about the environment. It starts processing data from various sources – mostly the camera. To improve accuracy, the device combines data from other useful sensors like the accelerometer and the gyroscope.

Based on this data, the algorithm has two aims:

- Build a map of the environment

- Locate the device within that environment