The AR ecosystem is still small, yet it is already fragmented: Google develops ARCore, Apple creates ARKit and Microsoft is working on the Mixed Reality Toolkit. Fortunately, Unity has started unifying these APIs with the ARInterface.

At Unite Austin, two of the Unity engineers introduced the new experimental ARInterface. In November 2017, they released it to the public via GitHub. It looks like this will be integrated into Unity 2018 – the new features of Unity 2018.1 include “AR Crossplatform Kit (ARCore/ARKit API)”.

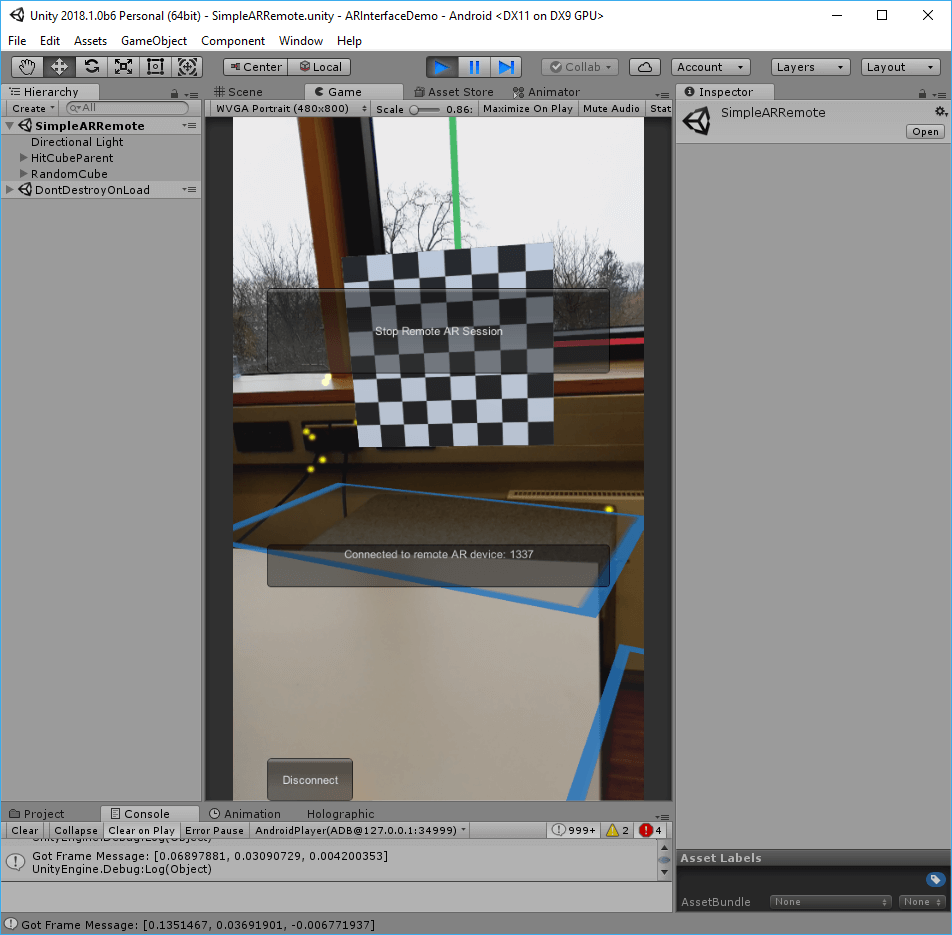

Remote Testing of AR Apps

The traditional mobile AR app development cycle includes compiling and deploying apps to a real device. That takes a long time and is tedious for quick testing iterations.

A big advantage of ARKit so far has been the ARKit Unity Remote feature. The iPhone runs a simple “tracking” app. It transmits its captured live data to the PC. Your actual AR app is running directly in the Unity Editor on the PC, based on the data it gets from the device. Through this approach, you can run the app by simply pressing the Play-button in Unity, without native compilation.

This is similar to the Holographic Emulation for the Microsoft HoloLens, which has been available for Unity for some time.

The great news is that the new Unity ARInterface finally adds a similar feature to Google ARCore: ARRemoteInterface. It’s available cross-platform for ARKit and ARCore.

ARInterface Demo App

In this article, I’ll explain the steps to get AR Remote running on Google ARCore. For reference: “Pirates Just AR” also posted a helpful short video on YouTube.

To explore AR Remote Interfaces, create a new project with the latest version of Unity. I’m using Unity 2018.1 Beta 6. All versions starting with Unity 2017.3.0f3 are compatible.

Even though the APIs & tools might be integrated with Unity soon, right now you still need to clone Unity’s experimental-ARInterface GitHub repository.

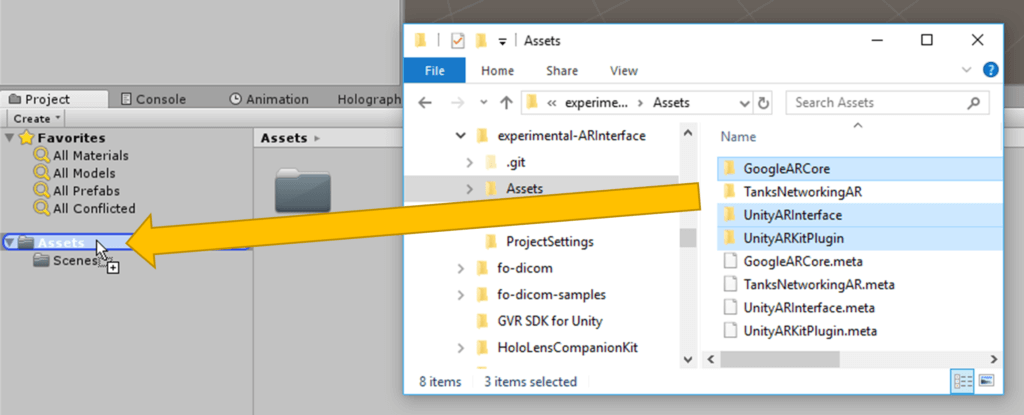

Once your setup is finished, create a new empty 3D project. From the cloned repository, drag the following folders into your Assets in Unity:

- experimental-ARInterface/Assets/UnityARInterface

- experimental-ARInterface/Assets/GoogleARCore

- experimental-ARInterface/Assets/UnityARKitPlugin

You currently need both the Google and Apple SDKs even if targeting only one platform. The common UnityARInterface contains references to both.

The ARInterface repository includes copies of the platform SDKs (ARCore & ARKit), making it more convenient for you. Alternatively, you could get those SDKs directly from the manufacturers – it’s the same code.

- The Google ARCore Unity SDK is on GitHub.

- The Unity ARKit Plug-In is on Bitbucket and in the Asset Store.

Configure & Deploy the Remote App for Android

The next step is to get the remote app running on your Android device. The app captures the camera + sensor data and streams it to the Unity Editor on the PC. Make sure your Android phone is compatible with ARCore and install the prerequisites beforehand.

Open the demo scene for the remote app: Assets > UnityARInterface > ARRemote > RemoteDevice.unity. This contains the necessary code to send the live sensor data from the capturing device.

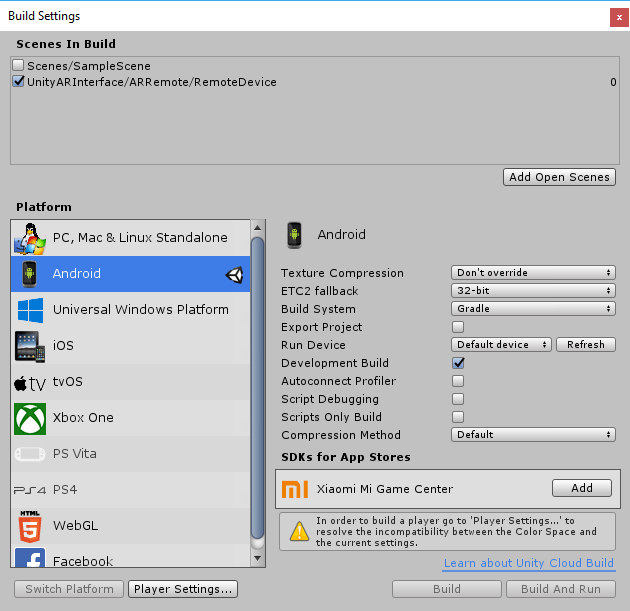

Now, configure the project for Android. Go to File > Build Settings… Select “Android” and click on “Switch Platform”. Make sure the RemoteDevice scene is being deployed – click on “Add Open Scenes”. Disable the “Sample Scene” for the build.

Also, for the remoting to work, enable the “Development Build” setting. This allows the app to send data back to Unity through the PlayerConnection mechanism. Your build configuration should look like this at the end:

Next, go to the Player Settings. Update the following information:

- Company Name

- Product Name

- Other Settings > Rendering > Color Space: Gamma

- Other Settings > Rendering > Multithreaded Rendering: off

- Other Settings > Identification > Package Name

- Other Settings > Identification > Minimum API Level: at least Android 7.0

- XR Settings > ARCore Supported: on

Everything’s ready! Build & Run your app. It’s just a pink screen, waiting for a connection with your Unity PC.
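If you rebuild the remote app often, the manual configuration steps above can also be scripted. The following is only a minimal sketch, not something that ships with the ARInterface repository: the class name, menu entry, company / product names, package name and output path are placeholders I made up. It assumes the standard UnityEditor build APIs as available in Unity 2018.1. The “ARCore Supported” checkbox is intentionally left to the Player Settings UI, as the corresponding scripting property may differ between Unity versions.

```csharp
#if UNITY_EDITOR
using System.IO;
using UnityEditor;
using UnityEngine;

// Hypothetical helper, not part of the experimental-ARInterface repository.
public static class ARRemoteAndroidBuild
{
    // Scene path as described in the article; adjust it if your folders differ.
    const string RemoteScene = "Assets/UnityARInterface/ARRemote/RemoteDevice.unity";

    [MenuItem("Tools/AR Remote/Build Android Remote App")]
    public static void Build()
    {
        // Placeholder identification values, replace them with your own.
        PlayerSettings.companyName = "ExampleCompany";
        PlayerSettings.productName = "ARRemoteDevice";
        PlayerSettings.SetApplicationIdentifier(
            BuildTargetGroup.Android, "com.examplecompany.arremotedevice");

        // Rendering settings from the list above.
        PlayerSettings.colorSpace = ColorSpace.Gamma;
        PlayerSettings.SetMobileMTRendering(BuildTargetGroup.Android, false);

        // API level 24 corresponds to Android 7.0.
        PlayerSettings.Android.minSdkVersion = AndroidSdkVersions.AndroidApiLevel24;

        // Make sure the (placeholder) output folder exists.
        Directory.CreateDirectory("Builds");

        var options = new BuildPlayerOptions
        {
            // Only the remote scene goes into the build.
            scenes = new[] { RemoteScene },
            locationPathName = "Builds/ARRemoteDevice.apk",
            target = BuildTarget.Android,
            // Development build is required for the PlayerConnection link;
            // AutoRunPlayer deploys and starts the app after building.
            options = BuildOptions.Development | BuildOptions.AutoRunPlayer
        };
        BuildPipeline.BuildPlayer(options);
    }
}
#endif
```

After Unity has compiled the script, the build can be started from the (made-up) menu entry Tools > AR Remote > Build Android Remote App.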

What AR Data does the Phone Send?

In the scene, look for the RemoteDevice > ARSetup game object. It contains the “ARRemoteDevice.cs” script that streams the data from the phone to Unity. The SendToEditor() methods transmit the actual data. Depending on what has been activated, this includes:

- Frame information: camera projection matrix, position, rotation and transform

- Added / updated / removed planes

- Point cloud

- Light estimate

- Camera image in YUY2 format: width, height and the actual Y + UV image data
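To get a feeling for how such data travels from the phone to the Editor, here is a small sketch of the device side built directly on Unity’s PlayerConnection class. This is not the code from ARRemoteDevice.cs: the DemoPose type, the message GUID and the BinaryFormatter serialization are my own assumptions for illustration. It only sends the position of the object it is attached to.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization.Formatters.Binary;
using UnityEngine;
using UnityEngine.Networking.PlayerConnection;

// Hypothetical payload type; the real remote uses its own serializable classes.
[Serializable]
public class DemoPose
{
    public float x, y, z;
}

// Hypothetical sender; attach it to a GameObject in a development build.
public class DemoPoseSender : MonoBehaviour
{
    // Arbitrary message ID chosen for this example; the receiver must use the same one.
    public static readonly Guid DemoPoseMsgId =
        new Guid("3f2c1a6e-8b7d-4c55-9e21-6a0f4d2b7c11");

    void Update()
    {
        // Nothing to do until the Editor has connected to this player.
        if (!PlayerConnection.instance.isConnected)
            return;

        Vector3 p = transform.position;
        var pose = new DemoPose { x = p.x, y = p.y, z = p.z };

        // Serialize the payload to a byte array and send it to the Editor.
        using (var stream = new MemoryStream())
        {
            new BinaryFormatter().Serialize(stream, pose);
            PlayerConnection.instance.Send(DemoPoseMsgId, stream.ToArray());
        }
    }
}
```

Sending a message every frame is fine for a tiny payload like this one. For larger data such as camera images, you would probably want to throttle or compress it.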

Running the AR App within the Unity Editor

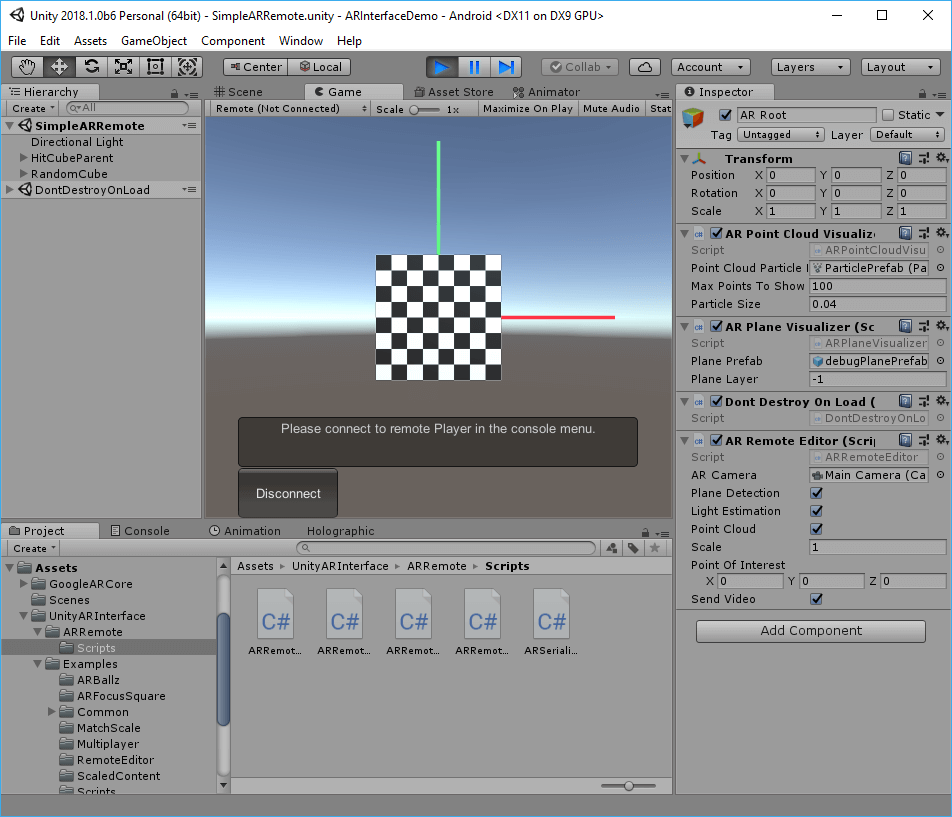

Once the remote app is up and running on your phone, open an AR scene in the Unity Editor. This scene should receive live data from your phone.

The sample project is a good starting point. Open: Assets > UnityARInterface > Examples > SimpleARScene. Within its “AR Root” game object, it includes the “ARRemoteEditor” script. This is the counterpart of the “ARRemoteDevice” script we analyzed before. It subscribes to incoming messages sent by the remote device.

Via implementations of the abstract ARInterface.cs class, the messages are then forwarded to the respective SDK. This could be an implementation for ARKit or ARCore; in our case, it’s AREditorInterface.cs, as we’re running the app in the Unity Editor.
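The idea behind this design can be illustrated with a heavily simplified sketch. All type and member names below are hypothetical stand-ins that I made up; the real ARInterface class has a much larger API. The point is only that the app talks to an abstract base class, while the Editor variant answers from data it received remotely.

```csharp
// Hypothetical, simplified stand-in for an abstract AR back end.
public abstract class DemoARBackend
{
    public abstract string Name { get; }

    // Returns false as long as no camera pose is available.
    public abstract bool TryGetCameraPosition(out float x, out float y, out float z);
}

// Hypothetical Editor back end: it has no sensors of its own and
// simply caches the last pose that arrived from the remote device.
public sealed class DemoEditorBackend : DemoARBackend
{
    float m_X, m_Y, m_Z;
    bool m_HasPose;

    public override string Name
    {
        get { return "Editor (remote)"; }
    }

    // Called by whatever code receives frame messages from the phone.
    public void OnFrameReceived(float x, float y, float z)
    {
        m_X = x;
        m_Y = y;
        m_Z = z;
        m_HasPose = true;
    }

    public override bool TryGetCameraPosition(out float x, out float y, out float z)
    {
        x = m_X;
        y = m_Y;
        z = m_Z;
        return m_HasPose;
    }
}
```

App code written against DemoARBackend would not need to know whether the pose comes from a native SDK on the device or from the remote connection in the Editor.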

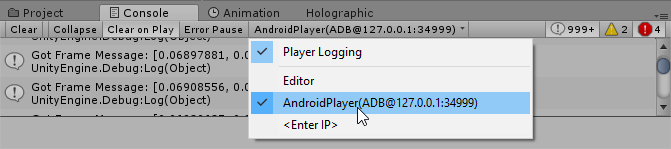

Both the Unity Editor and the phone are now waiting for the connection. The trick is: switch the “Connected Player” menu in the “Console” window to your connected Android phone. By default, the drop-down is set to “Editor”. This setting specifies the target for transmitting profiling and log data.

Once this is ready, both the phone and the Unity Editor recognize the connection. You only need to click the button “Start Remote AR Session” in the Unity Editor.

Make sure you set an aspect ratio in the top left of the “Game” window that corresponds to your phone screen aspect ratio.

In the Unity Editor, you now see the live camera image from your phone. In this example, detected planes are shown in blue and point clouds in yellow.

AR Remote Editor – Receiving AR Data from the Phone

What’s going on behind the scenes? The SimpleARScene sample scene has the “ARRemoteEditor.cs” script attached to the “AR Root” game object.

In SetupConnection(), the script registers for callbacks of the various messages sent by the remote client. Let’s take a look at the received information and extend the FrameMessageHandler() method with a simple debug output:

    void FrameMessageHandler(MessageEventArgs message)
    {
        Debug.Log("Got Frame Message: " + message.data.Deserialize<SerializableFrame>().cameraPosition);
        m_RemoteInterface.FrameMessageHandler(message);
    }

Here, I added the Debug.Log() call to deserialize the incoming message and to extract the transmitted camera position.

In the actual interface implementation for the Unity Editor, the values are then applied to the run-time environment.
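If you want to experiment with the underlying mechanism outside of the ARInterface scripts, registering for a message in the Editor could look roughly like the sketch below. It uses Unity’s EditorConnection class. The class name and the message GUID are assumptions for illustration and are not taken from ARRemoteEditor.cs; a player would have to send messages with the same GUID for the callback to fire.

```csharp
#if UNITY_EDITOR
using System;
using UnityEditor.Networking.PlayerConnection;
using UnityEngine;
using UnityEngine.Networking.PlayerConnection;

// Hypothetical receiver; attach it to a GameObject in the Editor scene.
public class DemoMessageReceiver : MonoBehaviour
{
    // Arbitrary message ID chosen for this example; the sender must use the same one.
    static readonly Guid DemoMsgId =
        new Guid("3f2c1a6e-8b7d-4c55-9e21-6a0f4d2b7c11");

    void OnEnable()
    {
        // Set up the Editor side of the connection and subscribe to the message.
        EditorConnection.instance.Initialize();
        EditorConnection.instance.Register(DemoMsgId, OnDemoMessage);
    }

    void OnDisable()
    {
        // Unsubscribe so that no stale callback remains after leaving Play mode.
        EditorConnection.instance.Unregister(DemoMsgId, OnDemoMessage);
    }

    void OnDemoMessage(MessageEventArgs args)
    {
        // args.data holds the raw bytes; deserializing them is up to you.
        Debug.Log("Received " + args.data.Length + " bytes from player " + args.playerId);
    }
}
#endif
```

As with the AR Remote itself, messages only arrive if the app on the phone is a development build and is selected as the connected player.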

Next Steps

It will be interesting to see how the experimental ARInterface from Unity evolves. As the individual frameworks from Google and Apple get more powerful, the ARInterface needs to keep up. Plus, it’d be great to see HoloLens support added sooner or later.

Unity’s ARInterface is an important first step and will help bring more cross-platform AR experiences to end users!