ARCore has an excellent feature – light estimation. The ARCore SDK estimates the global lighting, which you can use as input for your own shaders to make the virtual objects fit in better with the captured real world. In this article, I’m taking a closer look at how the light estimation works in the current ARCore preview SDK.

Note: this article is based on ARCore developer preview 1. Some details changed in developer preview 2, although the general process is still similar.

Environmental Light: Prefab

To start with the result: after ARCore’s calculation, our app gets a LightEstimate. It contains a float called PixelIntensity, which estimates the average lighting conditions in the current camera image. Values range from 0.0 (black) to 1.0 (white). But when and how is this value calculated?

The ARCore Unity SDK contains a prefab for environmental lighting called Environmental Light. It reads the LightEstimate from the Frame and saves it to a global shader float called _GlobalLightEstimation, which all shaders can access to adjust the color of surfaces. More info about setting global shader variables is available in the Unity documentation.

```csharp
Shader.SetGlobalFloat("_GlobalLightEstimation", colorScale);
```
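Conceptually, a global shader property is one value shared by every shader that reads it, so the prefab only has to write it once per frame. The following Python model illustrates that idea; it is not Unity’s API. GLOBAL_SHADER_FLOATS, set_global_float, environmental_light_update, and shade are hypothetical names, and the sketch assumes colorScale is simply the PixelIntensity value, which the real prefab may transform first.

```python
# Minimal sketch, NOT Unity's API: a module-level dict stands in for
# Unity's table of global shader properties.
GLOBAL_SHADER_FLOATS: dict[str, float] = {}


def set_global_float(name: str, value: float) -> None:
    """Hypothetical stand-in for Shader.SetGlobalFloat: one write, seen by all shaders."""
    GLOBAL_SHADER_FLOATS[name] = value


def environmental_light_update(pixel_intensity: float) -> None:
    """Runs once per frame, like the prefab's script.

    Assumption: colorScale is taken to be the raw PixelIntensity.
    """
    set_global_float("_GlobalLightEstimation", pixel_intensity)


def shade(rgb: tuple[float, float, float]) -> tuple[float, float, float]:
    """Any 'shader' reads the same global value; no per-object setup needed."""
    scale = GLOBAL_SHADER_FLOATS.get("_GlobalLightEstimation", 1.0)
    return (rgb[0] * scale, rgb[1] * scale, rgb[2] * scale)


environmental_light_update(0.5)
print(shade((1.0, 1.0, 1.0)))  # a white object
print(shade((0.8, 0.2, 0.4)))  # a different object, same scale factor
```

The design point is that no object needs a reference to the estimate: updating one global value per frame is enough for every material using a compatible shader.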

How Is the Light Estimate Calculated?

ARCore keeps an instance of a “Frame” as an up-to-date entry point for the relevant tracked data. This includes the pose, planes, and point clouds, as well as the light estimate.

The actual update of the light estimate is performed by the LightEstimationManager. Its source code still uses Tango naming instead of ARCore naming.

First, the method grabs the current raw pixel data of the native camera image. It uses the first plane, which contains the Y values of a YUV image. That makes sense, as Y corresponds to the luminance information.

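```csharp
// Excerpt: nativeImage is assumed to hold the current camera image at this
// point; the code that fills it is not shown.
UnityTango.NativeImage nativeImage = new UnityTango.NativeImage();

// The Y plane is always the first one.
var yPlaneInfo = nativeImage.planeInfos[0];

// Pointer to the first byte of the Y (luminance) plane inside the image buffer.
IntPtr yPlaneStart = new IntPtr(nativeImage.planeData.ToInt64() + yPlaneInfo.offset);

// The native service computes the intensity from the raw Y plane.
// (status is not checked in this excerpt.)
float intensity;
ApiServiceErrorStatus status = TangoClientApi.TangoService_getPixelIntensity(
    yPlaneStart,
    (int)nativeImage.width,
    (int)nativeImage.height,
    yPlaneInfo.rowStride,  // bytes per row; can exceed the width due to padding
    out intensity);

m_latestLightEstimate = new LightEstimate(intensity);
```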
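The call passes rowStride in addition to width and height because the rows of a camera image buffer can be padded: each row occupies rowStride bytes, but only the first width bytes are pixels. A minimal Python sketch of how such a plane is addressed, assuming one byte of luma per pixel; y_plane_rows is a hypothetical helper, not part of the SDK.

```python
def y_plane_rows(buffer: bytes, width: int, height: int, row_stride: int) -> list[bytes]:
    """Return the visible luma bytes of each row of a strided Y plane.

    Row r starts at byte r * row_stride; only its first `width` bytes are
    pixels, and any bytes after that (up to row_stride) are padding.
    """
    if row_stride < width:
        raise ValueError("row_stride must be at least width")
    # The last row does not need trailing padding, only its `width` pixels.
    if height > 0 and len(buffer) < (height - 1) * row_stride + width:
        raise ValueError("buffer is too small for the given dimensions")
    return [buffer[r * row_stride:r * row_stride + width] for r in range(height)]


# Usage: a 3x2 image stored with a row stride of 4 (one padding byte per row).
raw = bytes([10, 20, 30, 0, 40, 50, 60, 0])
print(y_plane_rows(raw, width=3, height=2, row_stride=4))
```

Averaging the pixels from these rows, rather than every byte of the raw buffer, keeps the padding bytes out of the brightness result.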

The raw pixel data is then passed to TangoClientApi.TangoService_getPixelIntensity(). Unfortunately, the source code of this implementation is not available. The Unity SDK does contain a native Android AAR plug-in called lighting_estimation.aar. However, the SDK seems to use code from the arcore_preview.apk package instead. This is the APK that users need to install on compatible devices to enable the ARCore preview, and it contains all the magic Augmented Reality code.

So how is the light estimate calculated? It appears to be a filtered average intensity of a subset of the pixels in the current frame, with a little frame-to-frame smoothing added into the mix. The approach is simple: it has no relation to the 3D world or to the actual position of the light source(s). It essentially calculates the average brightness of the image, and you can adapt the brightness of your own objects accordingly.
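Since the native implementation isn’t public, here is one possible reading of that description as a Python sketch, not ARCore’s actual code. The names frame_intensity and SmoothedEstimate are hypothetical, and the sampling step, the normalization by 255, and the exponential moving average with factor alpha are all assumptions standing in for the unknown filtering and smoothing.

```python
from typing import Optional


def frame_intensity(rows: list[bytes], step: int = 4) -> float:
    """Mean luma of every `step`-th pixel in every `step`-th row, scaled to 0..1.

    Assumptions: one byte of luma per pixel (0..255) and a regular
    subsampling grid; the real native code may choose its subset differently.
    """
    if step < 1:
        raise ValueError("step must be >= 1")
    total = 0
    count = 0
    for row in rows[::step]:
        samples = row[::step]  # slicing bytes yields bytes; sum() gives ints
        total += sum(samples)
        count += len(samples)
    if count == 0:
        raise ValueError("empty frame")
    return total / (count * 255.0)


class SmoothedEstimate:
    """Exponential moving average across frames; alpha is an assumed value."""

    def __init__(self, alpha: float = 0.2) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: Optional[float] = None

    def update(self, intensity: float) -> float:
        if self.value is None:
            self.value = intensity  # first frame: nothing to blend with yet
        else:
            # Move a fraction alpha of the way toward the new frame's value.
            self.value += self.alpha * (intensity - self.value)
        return self.value


# Usage: a uniformly dark frame followed by a uniformly bright one.
dark = [bytes([51] * 8) for _ in range(8)]     # luma 51 maps to 0.2
bright = [bytes([204] * 8) for _ in range(8)]  # luma 204 maps to 0.8
estimate = SmoothedEstimate(alpha=0.5)
print(estimate.update(frame_intensity(dark)))
print(estimate.update(frame_intensity(bright)))  # moves halfway toward 0.8
```

The smoothing trades responsiveness for stability: a smaller alpha suppresses flicker when the camera moves, at the cost of a slower reaction to real lighting changes.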

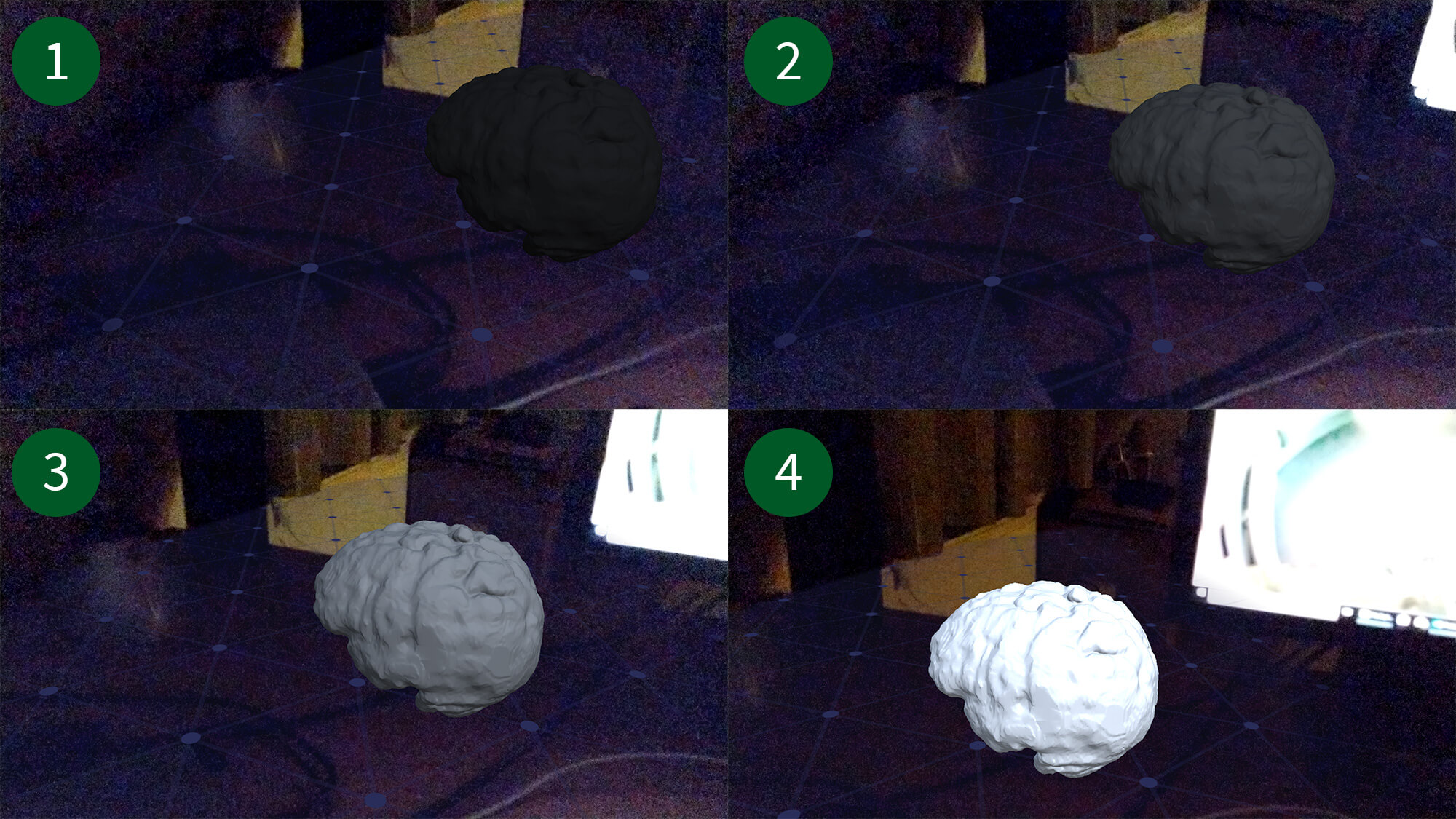

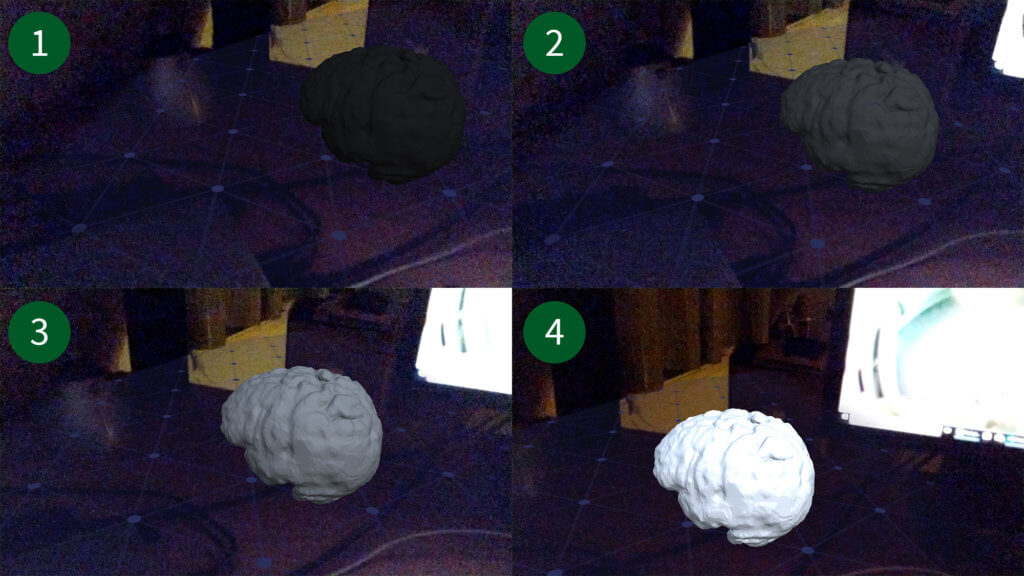

The following image shows four individual screenshots and highlights how the light estimation works in practice: the more of the bright monitor is visible in the camera image, the brighter the shader renders the object. The overall brightness of the room didn’t change; only what the camera captures is different.
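A toy Python model of the same effect, assuming the estimate is a plain mean of 8-bit luma: the hypothetical synthetic_frame helper builds frames in which a bright "monitor" region covers more and more columns while the room pixels stay exactly the same. This is an illustration, not data from the screenshots.

```python
def mean_intensity(frame: list[list[int]]) -> float:
    """Plain mean of 8-bit luma values, scaled to 0..1 (no subsampling or smoothing)."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / (len(pixels) * 255)


def synthetic_frame(monitor_cols: int, width: int = 10, height: int = 4,
                    room: int = 60, monitor: int = 240) -> list[list[int]]:
    """Hypothetical frame: the leftmost `monitor_cols` columns show a bright
    monitor, all other pixels show the room at a constant luma."""
    return [[monitor if x < monitor_cols else room for x in range(width)]
            for _ in range(height)]


# Same room, more and more of the monitor in view.
for cols in (0, 3, 6, 9):
    print(cols, round(mean_intensity(synthetic_frame(cols)), 3))
```

The printed estimates rise from about 0.24 with no monitor in view to about 0.87 when it fills most of the frame, even though nothing in the modeled room changed.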

Applying the Light Estimate to Your 3D Objects

So, how do we apply the light estimate to our 3D objects?

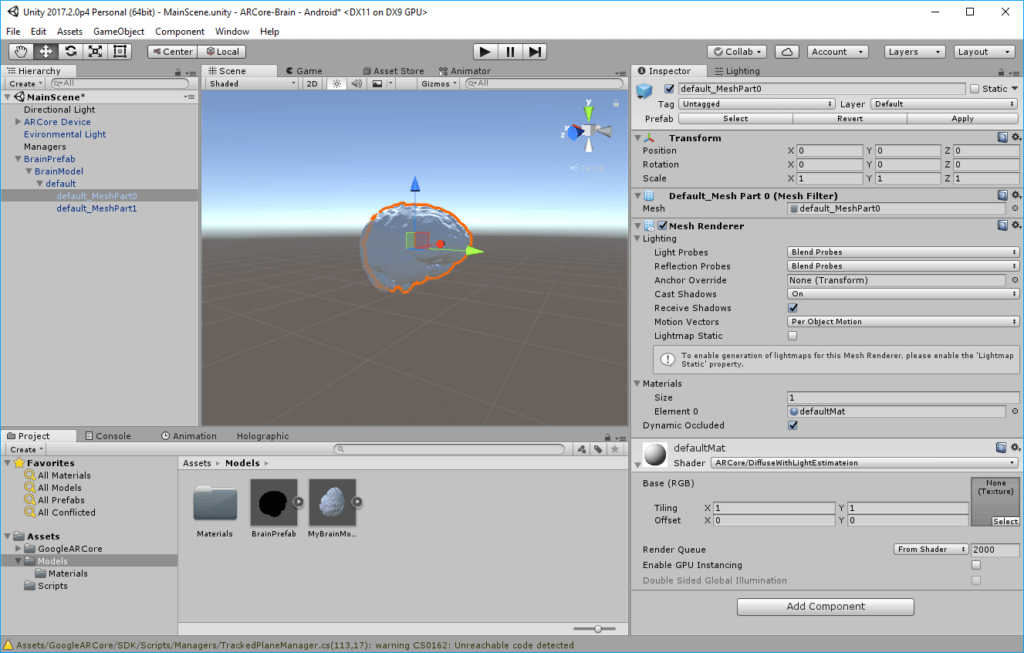

The scene should have a light source: the example app uses a directional light with an intensity of 0.7. The current ARCore preview doesn’t document any recommended settings. However, as ARCore is a subset of the Tango SDK, the Tango documentation is a useful reference: it recommends a directional light with a white color (instead of Unity’s default yellow/orange one) and no shadows.

Next, make sure you include the Environmental Light prefab in your scene. It only contains the “EnvironmentalLight.cs” script, which continuously reads the light estimate from the frame and writes it to the global float variable that the shaders read.

Finally, you need to give your object a material with a shader that uses the global float _GlobalLightEstimation as part of its color calculation. The ARCore SDK for Unity currently comes with two such shaders: one for diffuse and one for specular lighting.

How does the Shader use the Light Estimate?

To learn more about how shaders work in general, read the helpful article Unity – A Gentle Introduction to Shaders. For this blog post, I’ll examine the DiffuseWithLightEstimation shader, which is part of the ARCore example. It’s written in Cg, which is nearly identical to HLSL; Unity’s documentation refers to it as Cg/HLSL, and it is the most commonly used shading language in Unity.

The shader extends the texture-based shader from Unity’s Surface Shader examples. The main difference is the addition of the _GlobalLightEstimation parameter, which adapts the standard behavior.

Basic Shader Setup

The shader has only one property: _MainTex. It allows setting the texture as a parameter of the material. If no 2D texture is assigned, it defaults to plain white. In my app, I’m not using a texture so far: the brain model is segmented from a real MRI image, which doesn’t contain color information.

```shaderlab
Properties
{
    _MainTex ("Base (RGB)", 2D) = "white" {}
}
```

The SubShader section that follows contains the actual implementation of the shader. First, it sets a tag for Unity: “RenderType” is “Opaque”, the most common setting for regular objects that are not transparent.

```shaderlab
SubShader
{
    Tags { "RenderType"="Opaque" }
    // ... the shader code discussed below goes here
}
```

Most of the shader code corresponds to a simple standard surface shader. The Input struct contains uv_MainTex, the texture coordinates for the _MainTex texture defined in the Unity material (the uv prefix followed by the texture name tells Unity which texture’s coordinates to supply).

```hlsl
struct Input
{
    float2 uv_MainTex;  // UV coordinates for _MainTex
};
```

The Input struct is passed as the first parameter of the surf function, which contains the actual surface shader code. It samples the texture at these coordinates and assigns the color directly to the Albedo property of the surface output. If (as in my example) no texture is assigned, the color is simply white, as defined in the material properties at the beginning.

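```hlsl
sampler2D _MainTex;  // the texture declared in the Properties block

void surf (Input IN, inout SurfaceOutput o)
{
    // Sample the texture at this pixel's UV coordinates.
    fixed4 c = tex2D(_MainTex, IN.uv_MainTex);
    o.Albedo = c.rgb;
    o.Alpha = c.a;
}
```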

The #pragma directive reveals that the shader uses the Lambertian lighting model. It’s an approximation for rough, matte surfaces without specular highlights or glossy areas. As a model of diffuse reflection, it assumes the surfaces of our model reflect light equally in all directions, so the brightness depends only on the angle between the surface normal and the light direction, not on the viewing angle.

```hlsl
#pragma surface surf Lambert noforwardadd finalcolor:lightEstimation
```

In addition, the #pragma enables the noforwardadd option, which disables the forward rendering additive pass. From the Unity documentation: “This makes the shader support one full directional light, with all other lights computed per-vertex/SH. Makes shaders smaller as well.” That’s fine for our scene, as we only have one directional light.
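For reference, the Lambert model named in the #pragma boils down to a cosine term. The Python sketch below follows the diffuse formula of Unity’s built-in Lambert lighting (albedo times light color times max(0, N·L)) for a single directional light, but ignores attenuation, ambient/SH light, and lightmaps. normalize and lambert are hypothetical helper names, and light_color is assumed to already include the light’s intensity.

```python
import math

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def lambert(albedo: Vec3, normal: Vec3, to_light: Vec3, light_color: Vec3) -> Vec3:
    """Diffuse term for one directional light: albedo * light * max(0, N.L).

    Simplified: no attenuation, ambient/SH, or lightmaps. `to_light` points
    from the surface toward the light.
    """
    n = normalize(normal)
    ldir = normalize(to_light)
    n_dot_l = max(0.0, n[0] * ldir[0] + n[1] * ldir[1] + n[2] * ldir[2])
    return (albedo[0] * light_color[0] * n_dot_l,
            albedo[1] * light_color[1] * n_dot_l,
            albedo[2] * light_color[2] * n_dot_l)


white = (1.0, 1.0, 1.0)
light = (0.7, 0.7, 0.7)  # white directional light at intensity 0.7
up = (0.0, 0.0, 1.0)
print(lambert(white, up, (0.0, 0.0, 1.0), light))             # facing the light
print(lambert(white, up, (0.0, math.sqrt(3.0), 1.0), light))  # 60 degrees off
print(lambert(white, up, (0.0, 0.0, -1.0), light))            # facing away
```

Note that the viewing direction never appears in the formula, which is exactly why Lambert suits matte surfaces without highlights.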

Adding the Global Light Estimation

What distinguishes this shader from a run-of-the-mill surface shader is the color modification based on the global light estimate calculated by ARCore.

This is enabled by the finalcolor option in the #pragma line. It registers a custom final color modification function, which this shader calls lightEstimation. The details are explained in Unity’s Surface Shader Examples manual.

The lightEstimation function adjusts the color intensity using a simple multiplication. Remember that the light estimate we get from ARCore has a maximum of 1.0 (for a bright white environment) and a minimum of 0.0 (for a dark black environment).

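```hlsl
fixed _GlobalLightEstimation;  // set from C# via Shader.SetGlobalFloat

void lightEstimation(Input IN, SurfaceOutput o, inout fixed4 color)
{
    // Scale the fully lit color by ARCore's global estimate.
    color *= _GlobalLightEstimation;
}
```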
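The effect of this final color function can be sketched in Python as a scalar multiplication of the RGBA color. This is an illustration, not Unity code: light_estimation here is a hypothetical Python mirror of the shader function, and the input color stands in for whatever the Lambert lighting produced.

```python
RGBA = tuple[float, float, float, float]


def light_estimation(color: RGBA, global_light_estimation: float) -> RGBA:
    """Mirrors `color *= _GlobalLightEstimation;` in the final color function.

    Multiplying a fixed4 by a scalar scales all four channels, alpha included;
    for an opaque shader the alpha change typically has no visible effect.
    """
    r, g, b, a = color
    k = global_light_estimation
    return (r * k, g * k, b * k, a * k)


lit = (0.7, 0.7, 0.7, 1.0)         # color after Lambert lighting
print(light_estimation(lit, 1.0))  # bright environment: unchanged
print(light_estimation(lit, 0.5))  # dimmer environment: half as bright
```

Because the estimate never exceeds 1.0, the multiplication can only keep or reduce the brightness of the lit color.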

Modifying the color in this final step is different from applying the factor to the albedo in the surface function. The final color function runs after lighting has been computed, so the multiplication also scales contributions that might be added to the color by lightmaps, light probes, or other similar sources.

Conclusion

Digging into the code of ARCore’s light estimation revealed the following:

- The estimate is essentially the average luminance of the image captured by the phone’s color camera: 1.0 = white, 0.0 = black.

- It isn’t related to the actual brightness of the room you’re in, but depends on how the camera perceives it through its exposure settings.

- There is only one global light estimation value for the whole scene. You can’t adapt an object to the local brightness at the spot where it is placed.

- The shader of the HelloARExample multiplies the object color by the global light estimate. This adapts (and usually reduces) the RGB brightness of the final surface color.

While the results are not accurate for the specific position of the virtual object in the Augmented Reality scene, this simple approach still goes a long way toward making the overall scene look more realistic. The brightness of the hologram generally fits in well with the overall brightness of the camera image.

Want to test the code yourself? Download the source code from GitHub.