Realistically merging virtual objects with the real world in Augmented Reality poses a few challenges. The most important are:

- Realistic positioning, scale and rotation

- Lighting and shadows that match the real-world illumination

- Occlusion with real-world objects

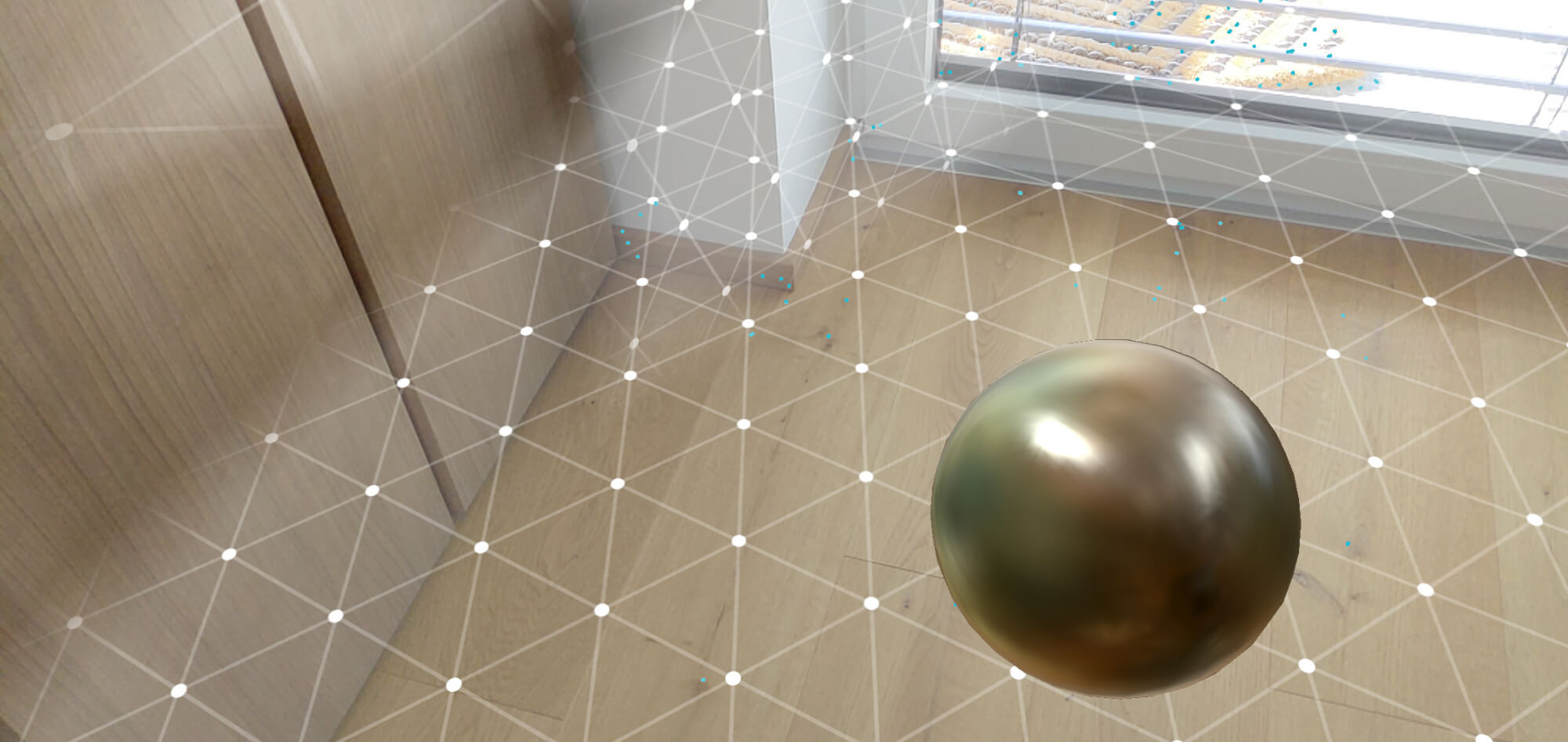

The first works very well in today’s AR systems. The third, occlusion, works reasonably well on the Microsoft HoloLens and is coming to ARCore soon: a private preview of the ARCore Depth API is currently running, which is probably based on the research by Flynn et al.

But what about the second item? Google has recently put a lot of effort into this. So, let’s look behind the scenes. How does ARCore estimate HDR (high dynamic range) lighting and reflections from the camera image?

Remember that ARCore needs to scale to a variety of smartphones; thus, a requirement is that it also works on phones that only have a single RGB camera – like the Google Pixel 2.

Light Estimation

The goal is simple: realistic HDR lighting for virtual objects. Ideally, this should work from low dynamic range source images – as the real-time camera stream that feeds into smartphone-based AR systems can’t capture HDR lighting. The less source material the algorithm requires to predict the lighting, the better; a single frame would of course be ideal. Is that possible?
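To see why a low dynamic range camera stream is a limitation, here is a minimal sketch of what happens to bright light sources in an 8-bit capture. The radiance values and the `capture_ldr` helper are made up for illustration, and the model ignores gamma and tone mapping; it is not how any real camera pipeline or ARCore works.

```python
def capture_ldr(radiance, exposure=1.0):
    """Simulate a simplified 8-bit camera: scale, clip to [0, 1], quantize.

    Assumption: linear response, no gamma curve, no noise.
    """
    pixels = []
    for value in radiance:
        clipped = min(max(value * exposure, 0.0), 1.0)
        pixels.append(round(clipped * 255))
    return pixels


# Hypothetical relative radiance values of four scene regions.
scene = {"shadow": 0.05, "wall": 0.8, "window": 4.0, "lamp": 120.0}

ldr = dict(zip(scene, capture_ldr(scene.values())))
for name, pixel in ldr.items():
    print(f"{name:7s} radiance={scene[name]:6.2f} -> pixel={pixel}")
```

In this toy scene, the window and the lamp both end up at the maximum pixel value, even though the lamp is 30 times brighter. That lost information is what an HDR light estimate has to recover from the remaining cues in the image.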

In the publication “Learning to predict indoor illumination from a single image”, Gardner et al. showed some impressive results: they estimate the light sources from a normal photo and apply a similar lighting situation to the virtual objects. This covers both the location of the lights and their intensity. The underlying algorithm is based on a deep convolutional neural network.

Lighting Cues and Concepts

The Google AR developer documentation highlights several important properties that need to be simulated correctly to blend virtual and real objects:

- Specular highlights: shiny bits of surfaces; move with the viewer

- Shadows: layout of scene & where lights come from

- Shading: surface + light influences reflection

- Reflection: reflected colors

All these properties directly influence the color and brightness of each pixel in an image. On the one hand, the AR engine needs to work with these inputs to estimate the light and material properties. On the other hand, similar settings then have to be applied to the virtual objects.
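How shading and specular highlights contribute to a pixel can be illustrated with the classic Blinn-Phong model. This is a minimal sketch using a textbook shading model chosen for illustration; it is not the model ARCore uses, and the vectors and the `shininess` value are assumptions.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shade(normal, to_light, to_viewer, shininess=32.0):
    """Return the (diffuse, specular) Blinn-Phong terms for one surface point."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    # Diffuse shading depends only on the surface normal and the light.
    diffuse = max(dot(n, l), 0.0)
    # The specular highlight depends on the half vector between light and viewer.
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    specular = max(dot(n, h), 0.0) ** shininess if diffuse > 0.0 else 0.0
    return diffuse, specular


normal = (0.0, 0.0, 1.0)
light = (1.0, 0.0, 1.0)

# Viewer in the mirror direction of the light: strong highlight.
d1, s1 = shade(normal, light, (-1.0, 0.0, 1.0))
# Viewer next to the light: the highlight almost disappears.
d2, s2 = shade(normal, light, (1.0, 0.0, 1.0))

print(f"mirror view: diffuse={d1:.3f} specular={s1:.5f}")
print(f"side view:   diffuse={d2:.3f} specular={s2:.5f}")
```

The diffuse term is identical for both viewer positions, while the specular term changes drastically. This is the "move with the viewer" behavior of specular highlights from the list above.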

Human Perception & Lighting

A key fact to keep in mind is that we as humans usually only indirectly perceive the light field. We can only see object appearances and use our experience to infer the source.

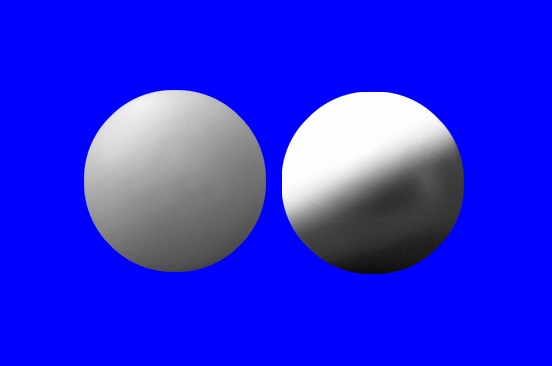

The question is: how good are we at doing this? Several studies have tried to find out. te Pas et al. asked people to judge images of two spheres and answer three questions:

- Are they the same material?

- Are they illuminated the same way?

- Is illumination or material the same?

The spheres were either photographs or computer-generated. The following image shows an example of what participants had to judge in the experiment:

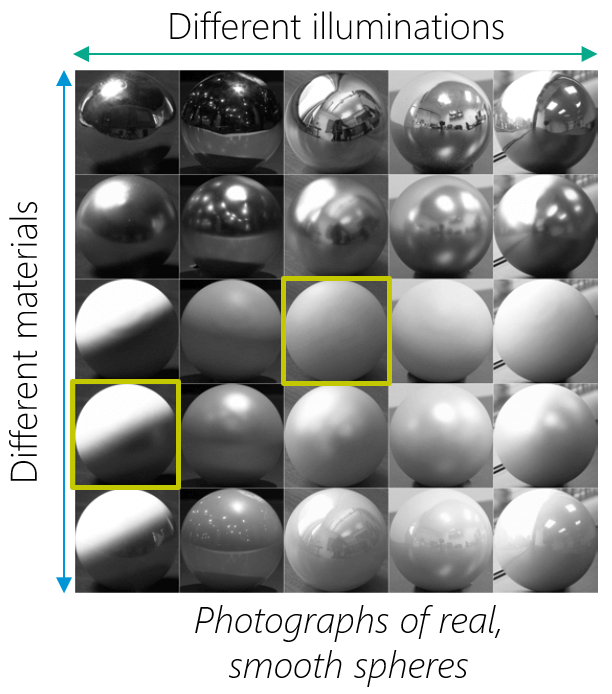

In the image above, both spheres are from real photos and not computer generated. However, they differ both in illumination and material. Not easy to judge, right? It gets a bit clearer once you look at the images of the test set with a little more context, as seen here:

What were the results of the study? They recommend the following:

- To make correct material perception possible, include higher order aspects of the light field and apply a realistic 3D texture (meso scale texture).

- To make a correct perception of the light field possible, you need to put emphasis on the realism of global light properties (in particular its mean direction & diffuseness).
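To make the two global light properties from the last recommendation more tangible, here is a rough sketch that summarizes a set of directional light samples by a mean direction and a diffuseness value. The metric (one minus the length of the intensity-weighted light vector divided by the total intensity) is a simplified illustration and an assumption on my side; it is not the definition used in the study. The sample data and the function name `light_field_summary` are hypothetical.

```python
import math


def light_field_summary(samples):
    """Summarize light samples given as (unit direction towards light, intensity).

    Returns (mean_direction, diffuseness). Diffuseness is 0 for a single
    collimated source and 1 for light arriving equally from all directions.
    Assumes at least one sample with positive intensity.
    """
    total = sum(intensity for _, intensity in samples)
    # Intensity-weighted sum of all light directions.
    vec = [sum(d[k] * i for d, i in samples) for k in range(3)]
    length = math.sqrt(sum(c * c for c in vec))
    # A perfectly uniform field has no meaningful mean direction.
    mean_dir = tuple(c / length for c in vec) if length > 1e-12 else None
    diffuseness = 1.0 - length / total
    return mean_dir, diffuseness


# A single spotlight from above.
spot = [((0.0, 0.0, 1.0), 5.0)]

# Equal light from all six axis directions.
uniform = [
    ((1.0, 0.0, 0.0), 1.0), ((-1.0, 0.0, 0.0), 1.0),
    ((0.0, 1.0, 0.0), 1.0), ((0.0, -1.0, 0.0), 1.0),
    ((0.0, 0.0, 1.0), 1.0), ((0.0, 0.0, -1.0), 1.0),
]

print(light_field_summary(spot))
print(light_field_summary(uniform))
```

Real scenes fall somewhere between these two extremes, for example a bright window plus diffuse light bouncing off the walls.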

Article Series

How is it possible to computationally perceive the light field of a scene? This will be covered in the second part of the article series. Finally, in the third part, I’ll show an example of how you can visualize ARCore’s reflection map in a Unity scene.